Jon Rawski

Assistant Professor of Linguistics

About

I teach courses on general and computational linguistics at San Jose State University. I generally study the mathematics of human language and learning. My work places mathematical boundary conditions on the grammars underlying human language and how they can be learned. These properties reflect humans’ unique neuronal structure and computational power, contributing foundational principles for the cognitive and computer sciences.

Interests

- Mathematical Linguistics

- Cognitive Science

- Theoretical Computer Science

Recent Publications

I try to make my work accessible in line with the FAIR Principles, either on this website or in another repository.

We show some divergences in expressive power when transformers are used as probabilistic and autoregressive sequence models.

We characterize transformer sequence models as transducers and show some expressivity bounds for several variants.

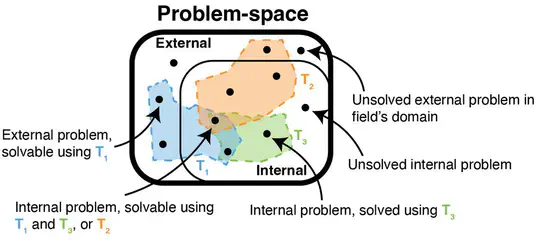

We give a pragmatic problem-centered account of theories’ epistemic virtues, like coherence, depth, and parsimony.

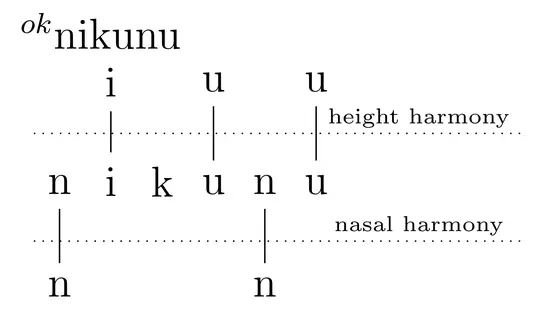

The Computational Power of Harmonic Forms

We give a computational overview of vowel harmony, describing necessary and sufficient conditions on phonotactics, processes, and learning.

Regular Reduplication Across Modalities

We show that reduplication in signed languages respects the upper bound of regular functions seen in spoken languages.